How bad must it be? Well, just ask John Ray, whose recently rejected essay, containing all you ever wanted to know about women, or male-female relationships, can be found right here. It comes from, well, a self-proclaimed expert, so you know it's true. Never mind that his whole evolutionary story contradicts what we actually know about male and female sexual behavior, or even the hypothesized evolutionary reasons for what we know, or that his advice seems to come from someone who has never actually met a woman. Pay particular attention to the part about most women wanting to be dominated. One wonders whether Mr. Ray has heard of the concept of projection. All in all, it's fascinating in a "back away, slowly" sort of way. And it appears that backing away, slowly, is just what Tech Central Station, not exactly known for its intellectual rigour, did.

An entrée of Cognitive Science with an occasional side of whatever the hell else I want to talk about.

Sunday, November 28, 2004

Saturday, November 27, 2004

By Request: Cognitive Science of Humor

Much like creativity in general, the cognitive aspects of humor haven't been widely studied. Creativity was long considered difficult to study because scientists, at least, tended to view it as a relatively rare and isolated phenomenon, but I'm not quite sure why cognitive scientists have neglected humor. Still, there is some research, some of it bad, some of it good, mostly arising out of the field of computational linguistics. The general finding is that humans are funny, while computational linguists are not. Aaaanyway, in this post I'm going to describe a few of the psychological theories of humor. Humor is by no means my area of expertise, so if I miss something and someone out there notices it, please let me know. As you're probably aware, humor has two primary aspects, a cognitive one and an affective one. Here I'm going to deal primarily with the cognitive aspect, so I won't be getting into issues like the health benefits of humor and laughter.


With humor, the best place to start is the brain. I'm not always comfortable talking about cognitive neuroscience, because there's so much bad cognitive neuroscience research, and because, as my neuroscientist friends are fond of reminding me, I'm not a neuroscientist. In this case, though, the neuroscience will help situate the subsequent discussion at the representational level. When we hear a joke, brain activation follows a predictable sequence. Depending on the type of joke, different areas associated with language processing (mostly, though not exclusively, in the left hemisphere), and in some cases with ambiguity resolution, are activated first. These include the left and right posterior middle temporal gyrus and the left posterior inferior temporal gyrus for semantic jokes, and the left posterior inferior temporal gyrus and left inferior frontal gyrus, areas associated with phonological processing, for puns1. After the joke is processed cognitively in these cortical areas, another set of brain regions, primarily subcortical, becomes active. These include the nucleus accumbens and the ventral tegmental area, both part of the mesolimbic pathway, which is associated with reward (and with things like addiction). Activation is also seen in the amygdala, a brain structure associated with emotion2.

There's not going to be a test on the brain structures, but there are three important lessons to be gleaned from the neuroscience research on humor. They are:

- The cognitive and affective components of jokes are reflected in the different brain areas that are activated, with humor activating areas used for language processing, reward, and emotion.

- The time course of activation, as we would expect, goes from cognitive areas to affective areas.

- Some of the cognitive areas activated by jokes are associated with ambiguity resolution. The importance of this will become clear in a bit.

The earliest modern psychological theory of humor, of course, was Freud's. As you might expect, Freud thought humor had to do with sex (for more on Freud's theory of humor, see here). Later theories saw humor as a means of disparagement, or of reaffirming superiority. Today, most theories, particularly in cognitive science, involve incongruity resolution (Freudian theories, usually called arousal-relief or just relief theories, are still used by some nonscientists, as are disparagement theories). The gist of the incongruity-resolution account of humor is that we find things humorous when they combine incongruous parts. Here's an often-quoted description of this theory3:

Laughter arises from the view of two or more inconsistent, unsuitable, or incongruous parts or circumstances, considered as united in one complex object or assemblage, or as acquiring a sort of mutual relation from the peculiar manner in which the mind takes notice of them.

Graeme Ritchie, a computational linguist, has distinguished two types of incongruity-resolution theories prominent in the literature, the surprise disambiguation and two-stage types, and describes them as follows4:

Surprise disambiguation: The set-up has two different interpretations, but one is much more obvious to the audience, who does not become aware of the other meaning. The meaning of the punchline conflicts with this obvious interpretation, but is compatible with, and even evokes, the other, hitherto hidden, meaning.

Two-stage: The punchline creates incongruity, and then a cognitive rule must be found which enables the content of the punchline to follow naturally from the information established in the set-up. (p. 2)

Ritchie classifies the most popular theory today, Raskin's Semantic Script-based Theory of Humor5, as a version of the surprise disambiguation view. Because it is the most influential incongruity-resolution theory, I'll focus on it in elaborating on the incongruity-resolution view.

In Raskin's theory, humor involves the activation of two opposing scripts, such as sex/no sex, good/bad, money/no money, possible/impossible, real/unreal, etc. Humor arises when one script is activated and the opposing script is then activated as well, creating ambiguity. Thus there are three stages. In the first stage, one script (or schema) is activated. In the second stage, information that is incongruous with that schema activates the opposing script, creating ambiguity. In the final stage, the ambiguity is resolved. To see how this works, here is an example used by Raskin:

The first thing that strikes a stranger in New York is a big car.

According to Raskin, this sentence is processed in such a way that the first meaning of "strikes" to be activated is the one indicating surprise; the collision meaning of "strikes" is activated only after reading "a big car." With these two meanings active at the same time, ambiguity is created, and it is the resolution of this ambiguity, or incongruity, that makes the sentence humorous. In a more recent version of Raskin's theory, called the General Theory of Verbal Humor6, the incongruity-resolution core of the theory is supplemented by "knowledge resources" designed to allow for the influence of things like context, reasoning processes, and text-comprehension processes. Still, the primary feature that distinguishes humorous texts and situations from non-humorous ones is the ambiguity, or incongruity, that must be resolved.
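To make the three stages concrete, here is a toy Python sketch of Raskin's "strikes" example. It is only an illustration under stated assumptions: the two sense labels, the hand-picked cue words, and the names `SENSES` and `trace_joke` are hypothetical, and choosing a sense by counting cue-word overlap is a crude stand-in for real lexical access, not part of Raskin's theory.

```python
from dataclasses import dataclass

# Hypothetical toy lexicon: each sense of the ambiguous word "strikes" is tied
# to a script, plus a hand-picked set of cue words that support that reading.
SENSES = {
    "surprise": {"script": "perception", "cues": {"first", "thing", "stranger"}},
    "collision": {"script": "physical impact", "cues": {"big", "car"}},
}


@dataclass
class Stage:
    name: str
    active: set[str]


def best_sense(words: list[str]) -> str:
    """Pick the sense whose cue words overlap most with the given words."""
    return max(SENSES, key=lambda s: len(SENSES[s]["cues"] & set(words)))


def trace_joke(setup: list[str], punchline: list[str]) -> list[Stage]:
    """Return a three-stage activation trace in the spirit of Raskin's account.

    Stage 1: only the sense best supported by the set-up is active.
    Stage 2: the punchline activates a second sense, so both are active.
    Stage 3: resolution keeps only the sense the punchline supports.
    """
    first = best_sense(setup)
    second = best_sense(punchline)
    return [
        Stage("script activation", {first}),
        Stage("incongruity", {first, second}),
        Stage("resolution", {second}),
    ]


setup = "the first thing that strikes a stranger in new york is".split()
punchline = "a big car".split()
for stage in trace_joke(setup, punchline):
    print(f"{stage.name}: {sorted(stage.active)}")
```

Running it prints one line per stage: only the surprise sense at first, both senses at the incongruity stage, and only the collision sense after resolution.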

In recent years, other researchers have proposed various reworkings of Raskin's theory. For instance, in cognitive linguistics, Coulson7 has proposed a theory in which two incongruous mental spaces are activated at once and resolved in the blend. Veatch has proposed a theory with three components, whose gist he describes this way:

1) V Something is wrong. That is, the perceiver thinks that things in the situation ought to be a certain way -- and cares about it -- and that is Violated.

2) N The situation is actually okay. That is, the perceiver has in mind a predominating view of the situation as being Normal.

3) Simultaneity Both occur at the same time. That is, the N and V understandings are present in the mind of the perceiver at the same instant.

So humor is emotional pain that doesn't actually hurt. Or a violation that you care about, overlaid with the conviction that everything is normal (either good or neutral, but not bad).

Unlike Raskin's theory and most others, Veatch's theory is explicitly designed to account for nonverbal humor. In fact, one of his favorite examples is peekaboo. It is also meant to account for the affective components of humor, which most other incongruity-resolution theories deal with only cursorily. Still, Veatch is a linguist, like the other theorists mentioned so far, and thus he has yet to test his theory empirically. In fact, because the cognitive scientists who've constructed theories of humor have almost all been linguists, there has been very little empirical testing of even the basic assumptions of these theories, such as the activation of competing scripts/schemas, or the need to resolve ambiguity. I, on the other hand, am not a linguist, and my first inclination is to look for data. So I searched and searched, and found some. I'll briefly describe one set of experiments related to the incongruity-resolution models discussed so far, though it won't provide any evidence for deciding which theory is better. I'll then describe some research in other areas of cognition that might be relevant to theories of humor, but which hasn't been incorporated into the theories thus far.
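Veatch's three conditions amount to a conjunction, so they can be written as a small predicate. The sketch below is a hypothetical encoding, not something Veatch provides: each understanding of a situation gets a kind ("V" or "N") and a half-open time interval, and a situation counts as humorous only if some cared-about violation overlaps in time with some normal view. The `Understanding` class, the interval representation, and the reading of peekaboo are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Understanding:
    """One way the perceiver construes a situation, active over [start, end)."""
    kind: str                 # "V" (a violation) or "N" (normal, okay)
    start: float
    end: float
    cared_about: bool = True  # Veatch's V condition requires that the perceiver cares


def overlaps(a: Understanding, b: Understanding) -> bool:
    # Two half-open intervals overlap if each starts before the other ends.
    return a.start < b.end and b.start < a.end


def is_humorous(understandings: list[Understanding]) -> bool:
    """True if some cared-about violation (V) and some normal view (N)
    are held at the same time (the simultaneity condition)."""
    violations = [u for u in understandings if u.kind == "V" and u.cared_about]
    normals = [u for u in understandings if u.kind == "N"]
    return any(overlaps(v, n) for v in violations for n in normals)


# Hypothetical reading of peekaboo: the face "vanishing" (V) overlaps with
# the child's sense that everything is fine (N).
peekaboo = [Understanding("V", 0.0, 1.0), Understanding("N", 0.0, 2.0)]
# A real fright: a violation with no normal view at all.
fright = [Understanding("V", 0.0, 1.0)]
# The same violation, with reassurance arriving only after it has passed.
relief_later = [Understanding("V", 0.0, 1.0), Understanding("N", 1.0, 2.0)]

for name, case in [("peekaboo", peekaboo), ("fright", fright), ("relief_later", relief_later)]:
    print(name, is_humorous(case))
```

The `relief_later` case isolates the simultaneity condition: a violation followed by reassurance, with no overlap between the two, does not satisfy the predicate.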

In a set of experiments, Vaid et al.8 presented participants with written verbal jokes and primed the two opposing meanings at different points during the presentation: after the set-up had been read, in the middle of the joke, or after the punchline had been read. Most incongruity-resolution models would predict that, initially, only the set-up meaning would be active, while at some point after the incongruous information is encountered, both meanings would be active at the same time, forcing a resolution. Vaid et al.'s priming data supported this view. When primes were given at the earliest position, only the first meaning showed a priming effect, indicating that only it, and not the second, incongruous meaning, was active. At the intermediate position, both the first and second meanings showed priming effects, indicating that they were both active simultaneously. Finally, after participants had viewed the joke for an extended time, only the second meaning was active, indicating that a resolution had been found. Thus all three stages of the various incongruity-resolution models based on Raskin's Script-based Theory (activation of the first script, activation of a second script that creates ambiguity, and finally resolution of the ambiguity) were empirically supported.
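The logic of the Vaid et al. design can be laid out as a small prediction table. The sketch below is illustrative only: the position labels, the set-based encoding of "which meanings showed priming," and the "replacement" model (a hypothetical foil in which the punchline meaning simply replaces the set-up meaning, with no period of overlap) are assumptions, and no response-time data are modeled.

```python
# Meanings assumed to be active at each probe position under two models.
# "three_stage" follows the incongruity-resolution account described above;
# "replacement" is a hypothetical foil with no period of simultaneous activation.
MODELS = {
    "three_stage": {
        "early": {"first"},
        "intermediate": {"first", "second"},
        "late": {"second"},
    },
    "replacement": {
        "early": {"first"},
        "intermediate": {"second"},
        "late": {"second"},
    },
}

# Qualitative pattern of priming effects reported by Vaid et al.,
# as summarized in the post: which meanings showed priming at each position.
OBSERVED = {
    "early": {"first"},
    "intermediate": {"first", "second"},
    "late": {"second"},
}


def positions_matched(model: dict[str, set[str]]) -> list[str]:
    """Return the probe positions at which a model's prediction matches OBSERVED."""
    return [pos for pos in OBSERVED if model[pos] == OBSERVED[pos]]


for name, model in MODELS.items():
    matched = positions_matched(model)
    print(f"{name}: matches {len(matched)}/{len(OBSERVED)} positions {matched}")
```

Both models agree at the early and late positions; only the intermediate probe separates them, which is why the simultaneous activation of both meanings is the crucial result.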

Another area of research that might be relevant for cognitive theories of humor is research on schematic memory. Since most cognitive theories of humor involve the activation of incongruous schemas, its relevance seems obvious. Still, humor theorists have largely ignored the schematic memory research. For instance, Anderson and Pichert9 showed that when people read information that is incongruous with the currently active schema but congruent with another schema, the new schema becomes active. Furthermore, information associated with a schema that is not active during comprehension tends to be inaccessible. This means that the conflict would not arise until both schemas were activated, and that once the conflict is resolved and only the second schema is active, information associated with the first schema would not be accessible10. This is consistent with the finding that, in the Vaid et al. studies, only the second meaning was active several moments after the punchline had been read. It's also consistent with research showing that, although people tend to spend more time attending to the set-up during the initial processing of a joke (likely a sign of attempts to resolve the ambiguity between set-up and punchline), they are much more likely to remember the punchline of a joke than the set-up11.

Finally, because most incongruity-resolution theories say that jokes involve the alignment of opposing scripts or schemas, humor research could benefit from the vast literature on comparison, and on inter-domain mappings in particular. Coulson's blending theory, for instance, is one attempt to use mappings in a theory of humor. Other, more empirically supported theories of mapping should probably be considered as well when building complete theories of humor.

So there you have it: either more than you ever wanted to know about cognitive theories of humor, or barely enough to satisfy your appetite for knowledge, depending on how interested you are in the topic. I find it interesting for the reasons I've hinted at in the last two paragraphs: humor is closely related to many more general cognitive phenomena, such as schema activation, memory, and mappings between domains. Hopefully, then, researchers will begin to explore humor more thoroughly in the near future, as they have recently begun to explore creativity in general, because it is likely that many important insights about cognition can be gleaned from how people produce and comprehend humor. I'll leave you with a joke. You can apply what you've learned to it and see whether the theory can account for it. It's the shortest joke ever: Two Irish guys walk out of a bar.

1 Goel, V., & Dolan, R. J. (2001). The functional anatomy of humor: Segregating cognitive and affective components. Nature Neuroscience, 4(3), 237-238.

2 Mobbs, D., Greicius, M. D., Abdel-Azim, E., Menon, V., & Reiss, A. L. (2003). Humor modulates the mesolimbic reward centers. Neuron, 40(5), 1041-1048.

3 Beattie, J. (1776). An essay on laughter, and ludicrous composition. In Essays. Edinburgh: William Creech. Reprinted New York: Garland, 1971. Quoted in Raskin, V. (1985). Semantic Mechanisms of Humour. Dordrecht: Reidel.

4 Ritchie, G. (1999). Developing the incongruity-resolution theory. In Proceedings of the AISB Symposium on Creative Language: Stories and Humour, Edinburgh.

5 Raskin (1985). See note 3.

6 Attardo, S. (1993). Linguistic Theories of Humor. Berlin: Mouton de Gruyter.

7 Coulson, S. (2001). What's so funny: Conceptual blending in humorous examples. In V. Herman (Ed.), The Poetics of Cognition: Studies of Cognitive Linguistics and the Verbal Arts. Cambridge: Cambridge University Press.

8 Vaid, J., Hull, R., Heredia, R., Gerkens, D., & Martinez, F. (2003). Getting a joke: The time course of meaning activation in verbal humor. Journal of Pragmatics, 35, 1431-1449.

9 Anderson, R., & Pichert, J. (1978). Recall of previously unrecallable information following a shift in perspective. Journal of Verbal Learning and Verbal Behavior, 17, 1-12.

10 Stilwell, C. H., & Markman, A. B. (2001). The fate of irrelevant information in analogical mapping. Paper presented at the 23rd Annual Meeting of the Cognitive Science Society, Edinburgh, Scotland.

11 Mitchell, H., & Graesser, A. C. (2003). Investigating conceptually driven processing in humor: The effects of context on jokes. Paper presented at the 15th conference of the International Society for Humor Studies, Northern Illinois University.

Wednesday, November 24, 2004

Creative Cognition: Ordinary, Observable, and Unconscious

Recently, cognitive psychologists have begun to take a serious look at creativity in human cognition, and to study it empirically. Under traditional views of creativity, this is a daunting task, because creativity is viewed as something mysterious and extraordinary. However, researchers using what is now called the creative cognition approach1 argue that this traditional view of creativity is wrong. Rather than relying on extraordinary or unusual cognitive processes, human creativity, even in its most striking forms, uses ordinary ones. Thus the difference between mundane, everyday acts of creativity and acts of extreme creativity is one of degree rather than kind. Adopting this view allows researchers to study creative phenomena in laboratory settings, using the methods of cognitive psychology, and to carry over knowledge from existing cognitive theories. In this post, I want to briefly outline the creative cognition approach, as presented in Finke, Ward, and Smith's Creative Cognition: Theory, Research, and Applications2 and Ward, Smith, and Finke (1999). In a subsequent post, I will discuss some of the empirical findings generated by this approach.

The creative cognition approach is built around the Geneplore model3, which describes two types of processes involved in creative cognition: generative processes and exploratory processes. Generative processes are the ones most of us think of when we think of creativity: the processes by which creative concepts are first born. These processes are highly visible in extreme acts of creativity, but they are also evident in ordinary, everyday cognition. For instance, concepts themselves are products of generative processes. As Ward et al. (1999) write:

Exploratory processes are just that, processes used to explore the structures produced by generative processes. Examples of exploratory processes given by Ward, et al. include searching retrieved structures for "novel attributes," searching for "metaphorical implications," searching for possible functions, "the evaluation of structures from different perspectives or within different contexts," interpretation of structures from the perspective of the problem(s) to be solved, and "the search for various practical or conceptual limitations that are suggested by the structures" (p. 192).

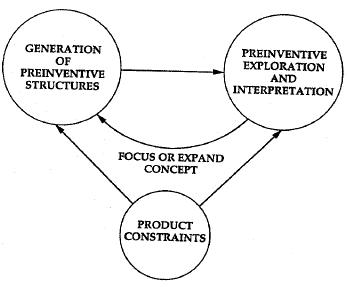

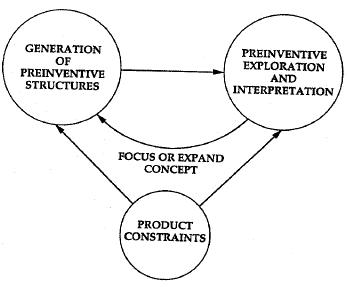

As you might imagine, it will sometimes be difficult to distinguish between generative and exploratory processes, in practice. The two types of processes interact in a dynamic fashion. In some cases, generative processes may be used to produce a novel idea, after which exploratory processes will disover potentially important limits to the utility of that idea. Generative processes will then produce a new novel idea based on the findings of the exploratory processes, and so on, until a desired solution or otherwise acceptible final structure is arrived at. The complex interactions between generative processes, exploratory processes, and context is presented in the following diagram from Ward, et al.:

Figure 10.1 from Ward, et al. (p. 193). The following is their caption:The basic structure of the Geneplore model. Preinventive structures are constructed during an initial, generative phase, and are interpreted during an exploratory phase. The resulting creative insights c an then be focused on specific issues or problems, or expanded conceptually, by modifying the preinventive structures and repeating the cycle. Constraints on the final product can be imposed at any time during the generative or exploratory phase.

One other interesting aspects of the Geneplore model is that most of its processes occur unconsciously, or below the level of awareness. This is particularly true of the generative processes, but also for many of the exploratory processes listed above. For example, the processes involved in analogical mapping occur largely unconsciously, and more often than not, memory retrieval is an automatic process born of cues in the environment. Furthermore, there is evidence that conscious thought, including (and perhaps especially) language, may actually inhibit generative processes. The unconscious nature of many (if not most) of the cognitive processes involved in creativity call into question the many anecdotal accounts of sudden insight and the production of creative ideas that have often fueled the belief that creativity is something mysterious and not amenable to careful empirical study. This does not mean that we can't consciously influence the outcome of creative processes. Exploratory processes can often be used quite deliberately, and even generative processes can benefit from conscious attention to problems and potential solutions. As Pasteur once said, "Chance favors the prepared mind."

So there you have it, the basics of the creative cognition approach. Over the last decade or so, researchers using this approach have produced a great deal of empirical research, with a wide arrange of findings. In the next post on creative cognition, I'll discuss some of these findings, and describe their implications for other disciplines, including science in general and literary theory.

1 Ward, T. B., Smith, S. M., & Finke, R. A. (1999). Creative cognition. In R. J. Sternberg (Ed.), Handbook of creativity. Cambridge: Cambridge University Press.

2 See also The Creative Cognition Approach and the chapter cited in footnote 1.

3 First described in Creative Cognition: Theory, Research, and Applications.

The creative cognition approach is built around the Geneplore model3, and describes two types of processes involved in creative cognition: generative processes and exploratory processes. Generative processes are those that most of us think about when we think of creativity. They are the processes by which creative concepts are first born. These processes are highly visible in extreme acts of creativity, but they are also evident in ordinary, everyday cognition. For instance, concepts are products of generative creative processes. As Ward, et al. (1999) write:

The mere fact that we readily construct a vast array of concrete and abstract concepts from an ongoing stream of otherwise discrete experiences implies a striking generative ability; concepts are creations. (p. 190)A wide range of ordinary cognitive processes can be used in the service of generating novel ideas. Examples from Ward et al. include memory retrieval, assocation formation among information retrieved from memory, combinations of structures retrieved from memory, the synthesis of new structures, the transformation of retrieved structures into "new forms," analogical transfer between domains, and "categorical reduction," which involves reducing existing structures to "more primitive constituents"(p. 191-2). One type of structure produced by generative processes is called a preinventive structure. Preinventive structures are, in essence, the "germs" of creative ideas. Ultimately, they may not resemble the final product of the creative process, but they are the ideas that get the ball rolling, and they can be created through any of the processes mentioned above, or through other ordinary cognitive processes.

Exploratory processes are just that, processes used to explore the structures produced by generative processes. Examples of exploratory processes given by Ward, et al. include searching retrieved structures for "novel attributes," searching for "metaphorical implications," searching for possible functions, "the evaluation of structures from different perspectives or within different contexts," interpretation of structures from the perspective of the problem(s) to be solved, and "the search for various practical or conceptual limitations that are suggested by the structures" (p. 192).

As you might imagine, it will sometimes be difficult, in practice, to distinguish between generative and exploratory processes. The two types of processes interact dynamically. In some cases, generative processes may produce a novel idea, after which exploratory processes discover potentially important limits to the utility of that idea. Generative processes then produce a new idea based on the findings of the exploratory processes, and so on, until a desired solution or some other acceptable final structure is arrived at. The complex interactions between generative processes, exploratory processes, and context are presented in the following diagram from Ward, et al.:

Figure 10.1 from Ward, et al. (p. 193). The following is their caption:

The basic structure of the Geneplore model. Preinventive structures are constructed during an initial, generative phase, and are interpreted during an exploratory phase. The resulting creative insights can then be focused on specific issues or problems, or expanded conceptually, by modifying the preinventive structures and repeating the cycle. Constraints on the final product can be imposed at any time during the generative or exploratory phase.
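To make the cycle in that caption a little more concrete, here's a deliberately toy sketch of a generate-then-explore loop. Everything in it is my own invention for illustration, not anything from Ward et al.: "memory" is just a list of hypothetical features, the generative phase combines them into candidate (preinventive) structures, and the exploratory phase checks each candidate against hypothetical constraints. The only feedback here is "expand the structure and try again," whereas the real model lets exploratory findings reshape what gets generated next.

```python
from itertools import combinations
from typing import Callable, Dict, FrozenSet, Iterator, List, Optional

Structure = FrozenSet[str]
Constraint = Callable[[Structure], bool]


def generate(memory: List[str], size: int) -> Iterator[Structure]:
    """Generative phase (toy): combine retrieved features into preinventive structures."""
    for combo in combinations(memory, size):
        yield frozenset(combo)


def explore(structure: Structure, constraints: Dict[str, Constraint]) -> List[str]:
    """Exploratory phase (toy): report which constraints the structure fails."""
    return [name for name, ok in constraints.items() if not ok(structure)]


def geneplore(memory: List[str], constraints: Dict[str, Constraint],
              max_size: int = 4) -> Optional[Structure]:
    """Cycle generate -> explore, expanding the structures each cycle, until one passes."""
    for size in range(1, max_size + 1):  # "expanded conceptually" by adding features
        for candidate in generate(memory, size):
            if not explore(candidate, constraints):
                return candidate
    return None  # no acceptable structure within the size limit


# Hypothetical example: features retrieved from memory while inventing a boat.
MEMORY = ["wings", "wheels", "hull", "sail", "engine"]
CONSTRAINTS: Dict[str, Constraint] = {
    "floats": lambda s: "hull" in s,
    "self-propelled": lambda s: bool({"sail", "engine"} & s),
    "no wings": lambda s: "wings" not in s,
}

if __name__ == "__main__":
    print(sorted(geneplore(MEMORY, CONSTRAINTS)))  # ['hull', 'sail']
```

Constraints are applied during exploration here, but nothing stops you from also filtering `memory` before generation, which would correspond to imposing constraints during the generative phase.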

One other interesting aspect of the Geneplore model is that most of its processes occur unconsciously, or below the level of awareness. This is particularly true of the generative processes, but it also holds for many of the exploratory processes listed above. For example, the processes involved in analogical mapping occur largely unconsciously, and more often than not, memory retrieval is an automatic process triggered by cues in the environment. Furthermore, there is evidence that conscious thought, including (and perhaps especially) language, may actually inhibit generative processes. The unconscious nature of many (if not most) of the cognitive processes involved in creativity calls into question the many anecdotal accounts of sudden insight and the production of creative ideas that have often fueled the belief that creativity is something mysterious and not amenable to careful empirical study. This does not mean that we can't consciously influence the outcome of creative processes. Exploratory processes can often be used quite deliberately, and even generative processes can benefit from conscious attention to problems and potential solutions. As Pasteur once said, "Chance favors the prepared mind."

So there you have it, the basics of the creative cognition approach. Over the last decade or so, researchers using this approach have produced a great deal of empirical research, with a wide range of findings. In the next post on creative cognition, I'll discuss some of these findings, and describe their implications for other disciplines, including science in general and literary theory.

1 Ward, T. B., Smith, S. M., & Finke, R. A. (1999). Creative cognition. In R. J. Sternberg (Ed.), Handbook of creativity. Cambridge: Cambridge University Press.

2 See also The Creative Cognition Approach and the chapter cited in footnote 1.

3 First described in Creative Cognition: Theory, Research, and Applications.

Tuesday, November 23, 2004

My Best Pharyngula Impression

OK, so I'm no PZ Myers. Hell, I'm not even a biologist. That doesn't mean I can't post about evolution, does it? Well, I guess it does, so I'm not even going to try to write something about evolution research. Instead, I'm just going to refer you to a paper that I found very interesting when I read it a couple years ago. I'm not really qualified to evaluate the paper's claims, so I won't even do that. You'll have to check out "Bayesian natural selection and the evolution of perceptual systems" for yourself. Here's a passage from the introduction, just to give you an idea of what the paper is all about:

Here we show that a constrained form of Bayesian statistical decision theory provides an appropriate framework for exploring the formal link between the statistics of the environment and the evolving genome. The framework consists of two components. One is a Bayesian ideal observer with a utility function appropriate for natural selection. The other is a Bayesian formulation of natural selection that neatly divides natural selection into several factors that are measured individually and then combined to characterize the process as a whole. In the Bayesian formulation, each allele vector (i.e. each instance of a polymorphism) in each species under consideration is represented by a fundamental equation, which describes how the number of organisms carrying that allele vector at time t + 1 is related to: (i) the number of organisms carrying that allele vector at time t; (ii) the prior probability of a state of the environment at time t; (iii) the likelihood of a stimulus given the state of the environment; (iv) the likelihood of a response given the stimulus; and (v) the birth and death rates given the response and the state of the environment. The process of natural selection is represented by iterating these fundamental equations in parallel over time, while updating the allele vectors using appropriate probability distributions for mutation and sexual recombination.

Our proposal draws upon two important research traditions in sensation and perception: ideal observer theory (De Vries 1943; Rose 1948; Peterson et al. 1954; Barlow 1957; Green & Swets 1966) and probabilistic functionalism (Brunswik & Kamiya 1953; Brunswik 1956). After reviewing these two research traditions, we motivate and derive the basic formulae for maximum fitness ideal observers and Bayesian natural selection. We then demonstrate the Bayesian approach by simulating the evolution of camouflage in passive organisms and the evolution of two-receptor sensory systems in active organisms that search for prey. Although we describe Bayesian natural selection in the context of perceptual systems, the approach is quite general and should be appropriate for systems ranging from molecular mechanisms within cells to the behaviour of organisms.

Enjoy.
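Since I said I wouldn't evaluate the paper, here's just a rough sketch of how the five factors in that passage might be combined. This is my guess at one plausible form, not the paper's actual fundamental equation: each allele vector's count is multiplied every time step by an expected growth factor, summed over environment states w, stimuli s, and responses r, weighted by p(w), p(s | w), and the allele's p(r | s), with a per-step multiplier of 1 + birth(r, w) - death(r, w). The predator world, the "vigilant" and "bold" allele vectors, and all the numbers are hypothetical, and there's no mutation or recombination.

```python
from typing import Dict

Dist = Dict[str, float]  # maps an outcome to its probability


def growth_factor(prior: Dist,
                  p_stim: Dict[str, Dist],
                  p_resp: Dict[str, Dist],
                  birth: Dict[str, Dict[str, float]],
                  death: Dict[str, Dict[str, float]]) -> float:
    """Expected per-capita multiplier for one allele vector over one time step.

    prior[w]        p(w): prior probability of environment state w
    p_stim[w][s]    p(s | w): likelihood of stimulus s given state w
    p_resp[s][r]    p(r | s): this allele vector's likelihood of response r to s
    birth[r][w], death[r][w]: rates given response r and state w
    """
    total = 0.0
    for w, pw in prior.items():
        for s, ps in p_stim[w].items():
            for r, pr in p_resp[s].items():
                total += pw * ps * pr * (1.0 + birth[r][w] - death[r][w])
    return total


def iterate(counts: Dict[str, float], responses: Dict[str, Dict[str, Dist]],
            prior, p_stim, birth, death, steps: int):
    """Apply each allele's equation in parallel; no mutation or recombination."""
    history = [dict(counts)]
    for _ in range(steps):
        counts = {a: n * growth_factor(prior, p_stim, responses[a], birth, death)
                  for a, n in counts.items()}
        history.append(counts)
    return history


# Hypothetical toy world: a shadow sometimes signals a predator.
PRIOR = {"predator": 0.2, "clear": 0.8}
P_STIM = {"predator": {"shadow": 0.9, "none": 0.1},
          "clear": {"shadow": 0.1, "none": 0.9}}
RESPONSES = {  # two hypothetical allele vectors
    "vigilant": {"shadow": {"flee": 1.0}, "none": {"stay": 1.0}},
    "bold": {"shadow": {"stay": 1.0}, "none": {"stay": 1.0}},
}
BIRTH = {"flee": {"predator": 0.05, "clear": 0.05},  # fleeing costs foraging time
         "stay": {"predator": 0.10, "clear": 0.10}}
DEATH = {"flee": {"predator": 0.05, "clear": 0.0},
         "stay": {"predator": 0.50, "clear": 0.0}}

if __name__ == "__main__":
    final = iterate({"vigilant": 100.0, "bold": 100.0}, RESPONSES,
                    PRIOR, P_STIM, BIRTH, DEATH, steps=20)[-1]
    print(final)
```

With these made-up numbers, the vigilant allele's growth factor works out to 1.068 per step and the bold allele's to 1.0, so the vigilant allele takes over. That's the general flavor of the framework: fitness falls out of the statistics of the environment combined with how well the perceptual system's responses match them.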

Monday, November 22, 2004

Late Night Generalizations About Philosophers' Intuitions

With all the talk of intuitions in philosophy (here's the latest installment), and particularly the fear that the intuitions of western academic philosophers may not be shared by members of other cultures, or even by nonphilosopher members of the same culture, it's easy to begin to wonder about the validity of many philosophical positions, or at least about how to save them from the problem of unshared intuitions. I myself think we should be skeptical about intuitions regardless of whether they're shared across members of different cultures. The reason is that no matter how hard philosophers try, and no matter how clean their intuitions are rendered through the process of making them explicit and filtering them through the sieve of social and academic review, so much of what goes into the production of those intuitions is unconscious and unavailable to introspection. The very idea that this sort of filtering is possible reminds me of the hubris Nietzsche remarks on in his essay "On the Prejudices of the Philosophers," where he writes:

Collectively they take up a position as if they had discovered and reached their real opinions through the self-development of a cool, pure, god-like disinterested dialectic (in contrast to the mystics of all ranks, who are more honestly stupid with their talk of "inspiration"—), while basically they defend with reasons sought out after the fact an assumed principle, an idea, an "inspiration," for the most part some heart-felt wish which has been abstracted and sifted.

Perhaps Nietzsche is a bit harsh, but I can't escape the impression that philosophers' intuitions, like those that Kripke and the philosophers who have followed him seem to share about things like reference (as discussed in Fodor's much-blogged-about review), are, when made explicit, nothing more than post hoc rationalizations of the positions in which our largely unconscious and unexamined conceptual schemes place us relative to the problems the philosophers are raising. If this is the case, then it's not surprising that members of cultures with conceptual schemes that vary in significant and relevant ways would have different "intuitions" when confronted with those problems. However, these cultural differences only hint at the real problem with intuitions, a problem made even clearer by the possibility that members of the same culture may not share the intuitions of philosophers. What this implies is that philosophers' intuitions are a product of specialized knowledge structures gained through training and reading related to the philosophical issues in question.

There's an upshot to all of this. When I changed disciplines from philosophy to psychology long ago, I used to get into heated discussions about the value of analytic philosophy with my graduate advisor. One of his most frequent remarks was that the biggest difference between philosophers and experimental psychologists is that psychologists are beholden to data. There's something naive about philosophers believing that their intuitions are somehow getting to the heart of the issues, that their intuitions are somehow direct, immediate, or otherwise unreasoned (but not unreasonable) impressions of or responses to the (often counterfactual) scenarios they explore. Regardless of how sophisticated the logical and conceptual tools at their disposal may be, philosophers are subject to the personal and social limitations and idiosyncrasies that come with human cognition. And since all that philosophers are beholden to, in most cases, is their own intuitions and those of other philosophers (members of a community that shares at least some relevant knowledge structures), there's no real way to independently test the validity of philosophical positions. If you want to know how something like reference works, you go out and look at how people use language to refer. That doesn't entail exploring one's own intuitions; psychologists realized the ineffectiveness of this sort of introspection 100 years ago. Instead, it entails going out and systematically gathering data. It's true that scientists, too, are shackled by their cognitive makeup, but that's the beauty of data: it constrains the range of possible intuitions that one can accept, and the more data one gathers, the fewer intuitions one can reasonably accept.

Obviously there are areas of philosophy where this method won't work. For example, you can't develop a meta-ethical theory, particularly one that's meant to be normative rather than purely descriptive, by going out and looking at people's moral reactions to situations. In this case, your goal isn't to understand how people behave, but to come up with theories about how they should behave regardless of how they actually do. However, there are intuitions that go into any moral theory that could benefit from some good data. For instance, any descriptive or moral theory of ethics will have to take into account facts about the human mind, and how it acts in the types of situations (particularly social situations) that ethical theorists are interested in.

Thus in some cases, like theories of reference, philosophy can best be used as a sort of hypothesis generator, which then passes its hypotheses along for empirical investigation. In other cases, like moral theorizing, philosophy produces the ultimate theories, but it should use knowledge from empirical investigations to gain insight into the potential structures of moral theories. Any philosophy that relies exclusively on intuitions, however, is doomed to be little more than a parlor game. As Fodor says in his review, "Is that a way for grown-ups to spend their time?"

Friday, November 19, 2004

A Connectionist Model of Metaphor

In my posts on metaphor (I, II, III, and IV), I focused primarily on theories of metaphor, with some empirical work thrown in for good measure. I didn't discuss any of the many models that implement these theories, primarily because there are so many. Today I was reading a paper on a connectionist model, called the Metaphor by Pattern Completion (MPC) model (Thomas & Mareschal, 1999), that implements the attributive categorization model (see posts III and IV), and I thought it might be interesting to give a quick description of it.

The model uses a pretty normal three-level connectionist architecture (see here for a good primer on connectionism), consisting of input and output nodes, along with intervening hidden nodes. The model was trained with exemplars from three separate categories (Apple, Fork, and Ball), and the representations of these three categories are stored in the hidden units. Here is a graphic representation of the architecture:

Figure 1 from Thomas and Mareschal (1999).

Given an exemplar, the model autoassociates the features that comprise the representation of the category to which the exemplar belongs. This just means that the model reproduces the features of the category, in the form of semantic vectors, as output. So, given an exemplar with a set of features, the model will determine which category the exemplar belongs to, and output the features of that category. To model metaphors, an exemplar serving as the topic of the metaphor is input into the node(s) representing the category that serves as the vehicle. For instance, the metaphor "An apple is a ball" would involve inputting an apple exemplar into the node(s) representing the category Ball. The output, in this case, would be a representation of apples (the topic) that has been altered by the vehicle representation, so that the output is more similar to the ball representation than the original exemplar was. Here is the authors' description of why this alteration of the topic representation occurs:

Pattern completion is a property of connectionist networks that derives from their non-linear processing (Rumelhart & McClelland, 1986). A network trained to respond to a given input set will still respond adequately given noisy versions of the input patterns. For example, if an autoassociator is trained to reproduce the vector <0 1 0 0> and is subsequently given the input <.2 .6 .2 .2>, its output is likely to be much closer to the vector it 'knows', perhaps <.0 .9 .0 .0>. An input is transformed so as to make it more consistent with the knowledge that the network has been previously trained on. The connection weights store the feature correlation information in previously experienced examples. If a partial input is presented to the network, it can use that correlation information to reconstruct the missing features.

When the model is actually used, it exhibits properties of metaphors consistent with the attributive categorization theory. First, the representations of the topic and vehicle interact to determine which features of the topic are represented in the output of the metaphor. Second, the output of the metaphorical comparison differs depending on the direction of the comparison: "An apple is a ball" produces a representation that is different from "A ball is an apple," indicating that the model produces results consistent with the irreversibility of metaphorical statements. Beyond these results, the model itself produces three new predictions. The first is that the smaller the range of features found in exemplars of a vehicle category, the fewer interactive effects metaphorical comparisons using that category as a vehicle will produce. In other words, when a vehicle with a small range of features is used in metaphors, the features it transfers to the topic will tend to be the same regardless of what the topic is. The second is that the same will be true when the vehicle category is highly familiar. Finally, metaphorical comparisons will involve the transfer of attributes from the vehicle to the topic that are not likely to be reported. This is because some features are transferred even though they don't make sense for the topic (e.g., "A ball is an apple" may transfer the feature "edible," even though very few balls are actually edible). As a result, Thomas & Mareschal predict that participants will be slower to respond to questions about features that are transferred but not otherwise reported.
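The pattern-completion property in that quote is easy to see in a small network. The sketch below swaps in a different, simpler technique than the one the MPC model uses: a Hopfield-style autoassociator with Hebbian (outer-product) weights and a sign non-linearity, rather than Thomas and Mareschal's backpropagation-trained network with hidden units. The three 4-feature training patterns are hypothetical (chosen so they don't interfere with each other), and because the units are binary, the output snaps all the way to <0 1 0 0> instead of the graded <.0 .9 .0 .0> in the quote.

```python
from typing import List, Sequence

Matrix = List[List[float]]


def to_bipolar(v: Sequence[float]) -> List[float]:
    """Map activations in [0, 1] onto [-1, 1]."""
    return [2.0 * x - 1.0 for x in v]


def hebbian_weights(patterns: Sequence[Sequence[float]]) -> Matrix:
    """Sum of outer products of the stored (bipolar) patterns, diagonal zeroed."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j]
    return w


def recall(w: Matrix, state: Sequence[float], max_steps: int = 10) -> List[float]:
    """Synchronous sign updates until the state stops changing."""
    s = list(state)
    for _ in range(max_steps):
        net = [sum(w[i][j] * s[j] for j in range(len(s))) for i in range(len(s))]
        new = [1.0 if x >= 0 else -1.0 for x in net]  # the non-linearity
        if new == s:
            break
        s = new
    return s


def complete(w: Matrix, activations: Sequence[float]) -> List[int]:
    """Clean up a noisy 0-1 input toward the nearest trained pattern."""
    return [1 if x > 0 else 0 for x in recall(w, to_bipolar(activations))]


# Three hypothetical 4-feature training patterns (mutually orthogonal in bipolar form).
PATTERNS = [[0, 1, 0, 0], [1, 1, 1, 0], [1, 1, 0, 1]]
W = hebbian_weights([to_bipolar(p) for p in PATTERNS])

if __name__ == "__main__":
    print(complete(W, [0.2, 0.6, 0.2, 0.2]))  # [0, 1, 0, 0]
```

The noisy input gets pulled to the stored pattern it most resembles, which is the same basic move the MPC model exploits when it feeds a topic exemplar into the vehicle's category and lets the network "correct" it toward the vehicle.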

So, there you have it, a connectionist model of metaphor implementing the attributive categorization theory. There are several obvious problems and limitations with the model. For one, it's not really doing what the attributive categorization theory says is involved in metaphor. Recall that in the attributive categorization model, the vehicle is a member of a category. The topic is placed into that category, not into the category specifically referred to by the vehicle label. For example, "My job is a jail" doesn't involve categorizing my job as a jail, but as a member of a common category, confining places. In the MPC model, however, the topic is placed into the category referred to by the vehicle label, rather than into a common category. I imagine the model could be modified to do this, but as described, it isn't doing what the attributive categorization theory says it should. In addition, the model can only deal with metaphors that involve the transfer of attributes, and cannot handle any metaphors that involve the transfer of structure. This is a big problem, since research has shown that most metaphors involve structural information. The model's version of the irreversibility of metaphorical comparisons is also pretty weak. The model can perform comparisons in either direction, and has no way of determining which direction is better. This hints at a limitation common to most (if not all) connectionist models: it's not clear how the model fits within a larger system, which, in this case, would ultimately be needed to explain the full range of metaphorical behaviors we humans exhibit. The model's simulation of metaphor is therefore uncomfortably elliptical. Finally, the first two predictions are hardly novel, and are pretty much self-evident. If the model had not demonstrated these properties, it would certainly have been in trouble, but the fact that it does make these predictions hardly makes a case for taking the model seriously. The third prediction is interesting, but it is also a prediction that most comparison theories would make, and therefore it wouldn't allow us to differentiate between this model and comparison models.

Buridan's Ass

At Oohlah's Blog-space, there are two recent posts (this one and that one) on "choice without preference" and Buridan's Ass. For those who are too lazy to click the links, here's the first of the two posts in its entirety:

In the first chapter of Jon Elster's Solomonic Judgments, he argues that choice without preference is not an important practical issue. He contends that no one cares which of two apparently identical soup cans on the supermarket shelf is chosen. The only way a choice like this will matter is if there are differences in the two soup cans, i.e., one has more broth than the other, etc.

I may have missed the point, but the problem of the choice without preference is that we can choose either soup can A or soup can B and satisfy our desire for buying soup. Since both soup cans will satisfy our desire, then rationality tells us to purchase both soup cans. If we think like this, though, we could potentially become poor very quickly. (Perhaps using the example of very similar cars on a new or used car lot would bring the problem to the fore.) So, it is not rational to purchase both cans. What seems to follow is that reason tells us to do something it is not rational to do.

Have I missed the point of choice without preference, or has Elster missed an important component of this difficult problem?

I may be stupid, but I'm like Elster: I just don't see a problem here. The scenario assumes that our only motivation is to purchase a can, and that there are no constraints on the choice other than preference. It ignores the motivation the post mentions (the financial motivation to buy only one can), time constraints, motor constraints, and so on, all of which factor into the optimal (which is really what "rational" means in this case) choice. If you get rid of all of those, then there's probably no need to choose a can in the first place. In fact, I bet that if you threw all of those into an Ideal Observer model, you'd find that the model either chooses one of the two cans all of the time (probably because of the motor constraints) or chooses each can 50% of the time (if the motor constraints don't favor either can). Humans, because we're not ideal observers (our behavior is suboptimal), will have all sorts of noise in our data if forced to make the choice a bunch of times, but our choices would probably be pretty close to the ideal observer model's, assuming the initial conditions were the same every time (which would be impossible, but you get the point).

Maybe Buridan's Ass is an interesting logical problem, even though it's not an interesting practical problem and probably can't shed any light on the decision-making process (though it apparently sheds some light on Romance novels; see here), other than to show that we have reasons for making decisions that go beyond the independent attractiveness of two options (duh!). But I can't imagine how it would be. For it to be even a logical problem, we would have to remove pretty much all of the motivations to make the choice in the first place (a similar point is made by Richard at Philosophy, etcetera). If it were still a problem, logical or otherwise, given all of the constraints and other motivations that factor into a choice like this (or with them all removed), here's how I would solve it: I'd pick up both cans and then make a choice when I got to the cashier. By that time, one choice should be better than the other (maybe because one can is closer to me in the cart). I think that's pretty much the solution people much smarter than me have traditionally come up with (e.g., Otto Neurath's solution, which just involved flipping a coin). As for the ass, since he probably doesn't have a shopping cart, or a coin, he's just going to have to suck it up and make a choice.
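Here's a bare-bones sketch of the kind of chooser I have in mind. It's a stand-in, not a real ideal observer (there's no perceptual uncertainty, so it's just an expected-utility decision rule): each can's utility is a hypothetical value minus a hypothetical "reach cost" (the motor constraint), the chooser takes the maximum, and exact ties get Neurath's coin flip. The optional Gaussian noise is my own hypothetical stand-in for suboptimal human choosers.

```python
import random
from typing import Dict


def choose(utilities: Dict[str, float], rng: random.Random) -> str:
    """Maximize utility; break exact ties with a fair coin flip."""
    best = max(utilities.values())
    tied = sorted(option for option, u in utilities.items() if u == best)
    return rng.choice(tied)


def simulate(reach_costs: Dict[str, float], value: float = 1.0,
             noise_sd: float = 0.0, trials: int = 10_000,
             seed: int = 0) -> Dict[str, float]:
    """Choice frequencies when utility = value - reach cost (+ optional Gaussian noise)."""
    rng = random.Random(seed)
    counts = {option: 0 for option in reach_costs}
    for _ in range(trials):
        utilities = {}
        for option, cost in reach_costs.items():
            noise = rng.gauss(0.0, noise_sd) if noise_sd > 0 else 0.0
            utilities[option] = value - cost + noise
        counts[choose(utilities, rng)] += 1
    return {option: n / trials for option, n in counts.items()}


if __name__ == "__main__":
    print(simulate({"A": 0.1, "B": 0.2}))                 # closer can wins every time
    print(simulate({"A": 0.1, "B": 0.1}))                 # coin flip: roughly 50/50
    print(simulate({"A": 0.1, "B": 0.2}, noise_sd=0.05))  # noisy, but mostly A
```

When the reach costs differ, the chooser picks the closer can on every trial; when they're identical, it splits roughly 50/50. Adding noise blurs the first case without changing which can usually wins, which is about what I'd expect from human shoppers.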

Wednesday, November 17, 2004

A Plea for More Requests

For those of you who find my political rants irksome, or worse, I just wanted to let you know that two cognition posts are in the offing, one on creative cognition and one on the cognitive psychology of humor (by request). I also want to ask (nay, beg) for more requests. In the post-election blog atmosphere, I'm having trouble coming up with ideas for posts. If there's anything you'd like to read about, let me know. (Also, I haven't forgotten the connectionism request, but I'm writing something on representations right now, and I may either post a short version of that, or summarize the ideas in it, when I'm finished.)

Tuesday, November 16, 2004

The Conservatives We Don't Want

The Anal"Philosopher" writes:

By allowing Muslims to immigrate into their countries in massive numbers, Europeans are destroying their culture and risking their lives. What idiots. And these are the people we are supposed to emulate with regard to gun ownership, capital punishment, homosexual "marriage," confiscatory taxation, and other matters? Americans must never stoop to the level of Europeans. If they do, they deserve Europe's fate.

I don't know about you, but to my ear, that sounds exactly like the arguments heard in the United States during the first few decades of the twentieth century, except that instead of Muslims, the arguments were directed at Italians and the Irish. It also sounds uncannily like the arguments made in the South about the effects of freeing the slaves and loosing all those dark-skinned folk on the fair, southern culture. These are the Republican voters we don't want to reach out to, and should ignore entirely. This may sound harsh, but I think what they want is less than worthless.

Trying to Reach the Evangelicals, On Purpose

Apparently it's fashionable among liberal bloggers (and non-bloggers) to wonder out loud about how Democrats can court the evangelical vote. For instance, Lindsay Beyerstein of Majikthise writes on the topic, concluding:

I don't know whether Democrats can find equally compelling areas of common ground with evangelical groups today. Perhaps Democrats can make common cause with evangelical groups over economic justice, peace, or other issues of mutual concern. The alliance between the religious right and the corporate right may seem inevitable in retrospect, but it too is built on a sometimes uneasy compromise. Proponents of religious alternative outreach are urging Democrats to cultivate compromise in the other direction (economic interests over cultural interests). Whether such outreach is feasible remains an empirical question.

While Lindsay seems to harbor at least some hope of someday getting more evangelical votes, I do not, and to be honest, I don't want them. The evangelicals who don't already vote Democrat (and there are some; my parents make at least two) aren't the sort who are swayed by economic policies. The ones who are concerned with economic policy are likely to harbor a conservative, hyper-individualist, free-market view of economic issues, and any talk of something like universal health care or government-funded arts or environmental programs will turn them off immediately. If we talked about gun control, prison reform, or progressive taxation, we might cause these evangelicals to have an aneurysm. Then there are those who don't vote Democrat for moral and religious reasons. These are the people who vote based on issues like abortion, same-sex marriage, sex education, evolution, and the like. There simply is no reaching these two classes of evangelicals without compromising our core values, and as Lindsay herself says, "Compromise outreach to is morally unacceptable and politically naive."

Instead of focusing on evangelicals, Democrats should be focusing on the people who do sometimes vote Democratic. Some of these voters may be evangelicals, and they may even hold some of the views that the untouchable evangelicals hold, but they don't vote on these issues alone. The way to get to them is to make it clear that Democrats will do more for them, in the short term and the long term, than Republicans will. People like me seem to think this is obvious, but the swing voters, evangelical or not, don't see it. So it's not as obvious as we think, and we liberals have got to do a better job of expressing ourselves and getting the message out there.

My one true hope, and I think this is a genuine possibility, is that the Democratic party will become more liberal, not less so, by recognizing that the conservative moral and economic voters are out of their reach, and focusing on what differentiates us from them. When survey after survey shows that a substantial majority of Americans support universal health care, why do we de-emphasize this issue in elections? Because we think we will lose the ultra-conservative voters? We've already lost them. The more we talk about trying to win them, the further we get from what we should be talking about.

Philosophers' Carnival IV: Attack of the Epistemologists

The fifth Philosophers' Carnival is up at Ciceronian Review. There are some interesting posts this time around. The post from Prosthesis on science and beauty is very interesting, and reminds me of Stephen J. Gould's work towards the end of his life (leading to this book, and research at this lab). It's hard to tell whether the author of the post at Prosthesis thinks "nonempirical" factors in theory selection are bad for science, but it's clear that he/she thinks they are unavoidable. I like Gould's take, in which nonempirical (most notably aesthetic) factors are not only inevitable, but desirable. Science is about understanding the world around us, after all, and aesthetic considerations can facilitate understanding.

Blowhard has a very good introduction to Stephen Toulmin, whom I only recently really discovered, so I found the post helpful.

Siris, which continues to be one of my favorite blogs, has a good post on Shepherd on causation. I don't really know anything about Shepherd, and I've only really begun to think seriously about causation, so this post was definitely edifying for me.

I also liked the post at Evolving Thoughts on knowledge of the past in science. The issues discussed in the post don't really come up in my own work as problems with scientific knowledge, but similar issues are raised when trying to verify the accuracy of memories. For instance, in the debate over recovered memories, what evidence constitutes verification of the accuracy of the content of recovered memories? Unlike in the sciences, immediate certainty is important here, because the future discovery of new evidence may come too late. When recovered memories are used as the primary evidence in criminal cases against accused child molesters, for example, we can't wait until after the trial to decide whether the recovered memories are accurate.

There are several other interesting posts in this edition of the Carnival as well, so go check them out.

Monday, November 15, 2004

Concepts of "Human" Among American Undergraduates

I've been reading a lot of literature on the creative cognition approach lately (expect a post soon), and came across something interesting, though it's not really about creative cognition. In an experiment by Ward et al., participants (98 undergraduates in an introductory psychology course) were asked to list properties of humans that distinguish them from animals. Here are the most common categories of properties listed, along with the percentage of participants who listed them (taken from Table 1 in Ward et al., p. 1390):

Category: % of participants
Communication: 72%
Mental ability: 68%
Physical features: 42%
Technical/manipulative: 33%
Emotions: 32%
Socioeconomic institutions: 25%
Morality/religion: 22%
Sex/reproduction: 20%
Clothing: 13%
Instinct: 15%
Family: 12%
Lifespan: 11%
Consciousness: 8%
Dominance: 7%
Creativity: 6%

How do their concepts of "human" fit with yours? I think it's interesting that "consciousness" was only listed by 8% of the participants, when it's played such a large role in intellectual debates about "humanness."

Sunday, November 14, 2004

Cognitive Science and Literary Criticism

A while back I discovered a very interesting, and perhaps even important (though, at least in the cognitive sciences, almost completely unread) paper by Herbert Simon, one of the few psychologists to have won the Nobel Prize. The paper, which can be found here along with several commentaries, attempts to "bridge the gap" between cognitive science and literary criticism. The essay focuses primarily on meaning, because literary criticism is a practice primarily aimed at analyzing the meanings of texts. His account of meaning is clearly impoverished: it revolves around memory retrieval. Here are two paragraphs from the paper that present the gist of what Simon has to say about meaning:

Meanings are evoked. When a reader attends to words in a text, certain symbols or symbol structures that are stored in that reader's memory come to awareness. (In psychology we might say, more ponderously, "having been noticed, the symbols are activated or transferred from long-term to short-term or immediate memory"). This is the sense in which we will use the term "evoke" throughout this paper. It denotes a specific set of psychological processes that have been much studied in the laboratory and in everyday life: the processes that bring meanings, or components of meaning, into attention.

The process that underlies evocation is recognition. Words in the text serve as cues. Being familiar (if they are not familiar, they will not convey meaning), they are recognized, and the act of recognition gives access to some of the information that has been stored in association with them--their meaning (Feigenbaum and Simon, 1984). Recognizing a word has the same effect as recognizing anything else (a friend on the street). Recognition accesses meaning.

Then, a little later, he adds:

The meaning of the text, then, will be a function of the memory contents that are accessed by recognition of words. Which of the whole collection of memory contents will be accessed depends on context, that is upon what contents are both associated directly or indirectly with the word recognized and also the extent to which they have recently been activated.

And to complete the summary:

Thus, the evocation of certain symbols may evoke others by the chain reaction that we call mental association. The burst of evocation that a bit of text may induce is limited only by the richness and complexity of the memory structures that it activates. The more elaborate the structures that are evoked, the more the meaning to the reader is defined by the reader's memory, the less by the author's words. The meaning of text is determined by a relation between the text itself and the current state of the memory of the reader, its contents, and its state of activation. (emphasis added)

I agree with Simon that literary criticism can benefit from a deeper connection with cognitive science (though I'm not so convinced the relationship can be symmetrical), but I also agree with some of the commentators (this one and this one, for instance) that Simon's conception of meaning isn't going to do anyone, much less literary critics, much good. If it were only that his description of the cognitive scientific view of meaning was an oversimplification, I think that would be OK. It's a short paper, and cognitive science has a lot to say about memory. However, I think oversimplification is not the only problem. The paper's account of meaning is just wrong. It's wrong in how it describes memory (which is probably more case-based, more reconstructive, and much less encyclopedic than he would lead us to believe), and I think he pays far too little attention (none, in most cases) to things like inference, imagery, layers of meaning, and the role of creativity in extending meaning. Still, with all its flaws, I think the aim of the paper is a good one.

I know what you're thinking: how does Chris think cognitive science can benefit literary criticism? Excellent question. The most obvious way is by developing an understanding of the cognitive processes involved in reading. In addition, the elucidation of the cognitive processes underlying literary techniques, like metaphor, imagery, and the construction of representations, can benefit the study of texts. Finally, cognitive scientists can help critics to understand creative cognition. How are novel representations produced? How do we create entirely fictional worlds out of the material of the factual world? None of these things is designed to give literary critics a single, all-encompassing view of meaning with which they can interpret all texts. There may not be such a theory, and if there is, cognitive science isn't yet equipped to give it. Still, each of those things plays a role in meaning construction, in the minds of both authors and readers.

Anyway, I recommend checking out the paper, and the commentaries, if this is the sort of thing that interests you. Simon is not much of a writer, but he's very insightful, and even when he's wrong, he still raises important issues.
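For anyone who wants Simon's "evocation" account made concrete, here is a toy spreading-activation sketch of it: recognized words are activated, activation spreads along stored associations (the "chain reaction that we call mental association"), and what gets evoked depends on residual activation from context. This is my own illustration, not Simon's model. The associative network `MEMORY`, the function `evoke`, and the `decay`, `threshold`, and `max_steps` values are all hypothetical choices. Note that it captures only association, with no inference, imagery, or layers of meaning, which is exactly the limitation I'm complaining about.

```python
from collections import defaultdict

# Hypothetical associative memory (illustration only, not from Simon's paper):
# each symbol maps to a list of (associated symbol, association strength) pairs.
MEMORY = {
    "rose": [("flower", 0.8), ("red", 0.6), ("love", 0.5)],
    "flower": [("garden", 0.7)],
    "red": [("blood", 0.4)],
    "love": [("romance", 0.6)],
}


def evoke(words, recent=None, decay=0.5, threshold=0.1, max_steps=3):
    """Return the activation level of every symbol evoked by `words`.

    Each recognized word receives 1.0 activation on top of any residual
    activation in `recent` (the "context"). Activation then spreads along
    associations, scaled by strength * decay, for at most `max_steps`
    rounds; spreads below `threshold` are too weak to evoke anything.
    """
    activation = defaultdict(float, recent or {})
    frontier = {}
    for w in words:
        activation[w] += 1.0
        frontier[w] = activation[w]
    for _ in range(max_steps):
        next_frontier = {}
        for symbol, level in frontier.items():
            for assoc, strength in MEMORY.get(symbol, []):
                spread = level * strength * decay
                if spread >= threshold:
                    activation[assoc] += spread
                    # A primed node passes on its *total* activation, so
                    # recent context extends the chain of association.
                    next_frontier[assoc] = activation[assoc]
        frontier = next_frontier
    return dict(activation)
```

With these made-up numbers, `evoke(["rose"])` activates "flower", "red", "love", and, more weakly, "garden", but not "romance". Calling `evoke(["rose"], recent={"love": 0.5})` lets activation reach "romance" too, a crude version of Simon's claim that what a word evokes depends on what has recently been activated.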